
The last time Jerry Seinfeld caught so much stick was in 2015 when he threw in the towel on comedy appearances at American universities.

Going back to the 1990s, Seinfeld was my favourite sitcom, even though, living in the UK at the time, I had to depend on the vagaries of BBC2’s late-night/early-morning schedule, which slotted the show in at around 12:50 a.m. and often replaced it with something else. Arrgh!

Returning to the present day, Seinfeld’s recent comedy film for Netflix, Unfrosted, tells the story of the 1960s breakfast sensation, Pop-Tarts. Reviews on both sides of the Atlantic say that it is not very funny. I cannot say, as I do not subscribe to Netflix.

Seinfeld also went back on his resolve no longer to appear at university venues, showing up a few days ago at Duke University to deliver the commencement address. He was heckled and booed. A number of students walked out on him.

I do not understand why people object to Jerry Seinfeld as a person. Even when his sitcom swept ratings charts in the United States, most viewers got the impression that he was just a normal, middle-class sort of man. His was always the voice of common sense in the show as his friends temporarily went off the deep end over amusing, everyday situations gone bad.

On Thursday, May 16, 2024, The Telegraph piled in on Seinfeld, reportedly a billionaire with a large collection of motor cars (emphases mine):

American universities have a habit of inviting a celebrity or leading figure in their field to deliver a commencement address to their students as they graduate, and, on paper, few are more distinguished than Seinfeld, one of comedy’s few billionaires whose achievements are unmatched within his field.

Yet, as he was about to deliver his speech, dozens of students simply walked out, booing loudly as they departed. Others remained, but unfurled ‘Free Palestine’ flags, and hurled abuse at the comedian as he delivered his address. Amidst cries of “Disclose, divest, we will not stop, we will not rest”, Seinfeld – who, one imagines, had seldom had such a vitriolic reaction since his early days on the comedy circuit – delivered a would-be inspirational speech in which he urged his listeners to work hard, listen to those wiser than themselves and find someone to grow old with.

For Seinfeld, merely showing up is not an option:

“Whatever you’re doing, I don’t care if it’s your job, your hobby, a relationship, getting a reservation at M Sushi, make an effort,” he said. “Just pure, stupid, no-real-idea-what-I’m-doing-here effort. Effort always yields a positive value, even if the outcome of the effort is absolute failure of the desired result. This is a rule of life. Just swing the bat and pray is not a bad approach to a lot of things.”

The students were upset that, as a Jew, Seinfeld stands with Israel. What else would they expect?

In a recent interview, he claimed that “the struggle of being Jewish is somewhat ancient…[there have been] thousands of years of struggle, and Israel is the latest one…antisemitism seems to be rekindling in some areas.” He visited the country at the end of last year, and expressed his support for them in the current situation, telling the Times of Israel that he would “always stand with Israel and the Jewish people”; something guaranteed to infuriate the pro-Palestinian sector of America’s student population. Hence the rather less than welcoming response at Duke, where, ironically, two of Seinfeld’s own children studied …

… In any case, he could hardly have been surprised by the reaction, given that, as far back as 2015, Seinfeld had advised his fellow comics not to perform at university campuses …

Seinfeld has not changed his mind about the dearth of comedy that English-speaking countries are experiencing:

… in widely reported remarks that he made on the New Yorker Radio Hour podcast, the comedian also declared that the recent downturn in scripted comedy was “the result of the extreme left and PC crap, and people worrying so much about offending other people.”

Warming to his theme, Seinfeld declared that “When you write a script, and it goes into four or five different hands, committee groups – ‘Here’s our thought about this joke’ – well, that’s the end of your comedy.”

He’s only telling the truth, isn’t he?

He says that stand-up comedy affords a certain amount of freedom for the performer:

In the same podcast, Seinfeld said that he felt a degree of freedom in being a stand-up comedian rather than a creator of a show such as his eponymous sitcom. “With certain comedians now, people are having fun with them stepping over the line, and us all laughing about it,” he said. “But again, it’s the stand-ups that really have the freedom to do it because no one else gets the blame if it doesn’t go down well. He or she can take all the blame [themselves].”

The reason I enjoyed Seinfeld so much was that it was as if I were watching four real-life New Yorkers rather than a set of actors playing roles. I also enjoyed co-creator Larry (Curb Your Enthusiasm) David’s description of it as ‘a show about nothing’:

One of the mantras of the sitcom Seinfeld was “no hugging, no learning”; the characters in it were believably flawed, and stayed that way, and, in contrast to the far more touchy-feely likes of Friends and (eventually) Frasier, there was to be no moment where the show’s protagonists learnt to become better people.

Well, that also portrays an eternal truth about human nature: most people do not change. Change comes only in television land. Furthermore, whatever lessons are learned at the end of one week’s show are forgotten by the next. Without those flaws, there is no show, and no comedy.

The Telegraph also has a go at Seinfeld’s life choices, which have left him a rich man who seeks out obscure comedy clubs to ply his trade. Again, I fail to see what is wrong with that:

… the problem remains that Seinfeld himself now looks hopelessly out of touch, sequestering himself away in vast apartments in Manhattan and mansions in the Hamptons, where he keeps a classic car collection of over 150 vehicles that is said to be worth over £80 million.

In many regards, Seinfeld’s post-sitcom career has been the exact opposite of his near-contemporary Kelsey Grammer, who has struggled to find a role as iconic as that of Frasier Crane. Seinfeld could hardly be described as reclusive, but he has seldom acted in others’ projects, save for self-mocking cameos as himself, and his appearance in Unfrosted is notable for being the first time that he has portrayed another (non-animated) character on-screen in a leading role.

Instead, what seems to drive him is spending his days writing jokes, which he then performs at unassuming comedy clubs: a process detailed in his 2002 documentary Comedian, which was released shortly after his sitcom came to an end in 1998. The film may have one of the funniest trailers ever made, but, perhaps unwittingly, it’s also an acute glimpse into the psyche of a man who wants to have his cake and eat it.

Seinfeld claims to value the cut and thrust of being on stage in front of audiences who treat him the same way as any other performer, but no other performers in such venues have the luxuries of private jets, multiple expensive residences all over the world – complete, if the rumours are accurate, with a $17,000 coffee maker – and well-paid PR staff on hand to flatter his considerable ego at all times.

I do not know how old the author of The Telegraph article is, but those who read about Jerry Seinfeld while his sitcom was on air knew that he was always a private person. Furthermore, why should people care about what he does with his money? If he were more left-wing in his views, would The Telegraph suddenly love him? It would seem so.

The article concludes, laying out Jerry Seinfeld’s many — no doubt inherent — middle class characteristics:

One of the reasons why the Duke appearance may have been so upsetting for Seinfeld is that he is, in many regards, identical to the ‘Jerry’ persona that he displayed in his sitcom for over a decade: uptight, misanthropic and cynical. For someone who could, theoretically, [have] been at the top of the A-list, he is a curiously detached presence in the entertainment industry.

He never wanted to be at the top of the A-list, though, and he made that clear in the 1990s. He is being true to himself. I disagree that he is ‘misanthropic and cynical’. As for being ‘uptight’, so are most middle-class men and women. We care about hygiene, like Jerry did in the sitcom. We care about fairness and objectivity, values that Jerry encouraged Elaine and George to adopt rather than navel-gazing.

Fortunately, The Telegraph article acknowledges just how successful the sitcom Seinfeld continues to be, living on long past its 1998 expiry date:

That Seinfeld was one of the great comedic innovators, performers and writers of the past half-century cannot be repeated enough. Many of the catchphrases from it – “No soup for you!” “Not that there’s anything wrong with that” – long ago entered the common lexicon and are cited and quoted by TikTok users who were not even born when the show ended.

The man whose sitcom was bought by Netflix for $500 million in 2021 remains big business …

Good for him. He’s done most things right without making his life an open book in the media.

These were his parting words to the Duke graduates:

“I need to tell you as a comedian, do not lose your sense of humour,” Seinfeld said. “You can have no idea at this point in your life how much you’re going to need it to get through …”

That is so true.

Jerry Seinfeld is a true product of the middle class. What is so wrong with that?

Many of us think that Easter is but one day.

There we would be mistaken. Eastertide runs all the way to Pentecost Sunday, which comes 50 days later. Sunday Lectionary readings continue to point us to the holy mystery of Christ’s resurrection and the promise of eternal bodily resurrection on the Last Day.

On Easter Day, a number of articles appeared in the press discussing the most important feast in the Church calendar. If Christ had not risen from the dead, our hope as Christians would be in vain.

Christ’s disciples did not understand or believe that He would actually rise from the dead on the third day. It was incomprehensible to them, even though Jesus had said this would happen and had raised his good friend Lazarus from the dead not long beforehand. The Critic explored this in ‘This vision glorious’, an article on Saint Mark’s account of the women who found our Lord’s tomb empty (emphases mine):

And they went out quickly, and fled from the sepulchre; for they trembled and were amazed: neither said they any thing to any man; for they were afraid (Mark 16:8)

This is the description in Saint Mark’s Gospel of the response of the women at the empty Tomb on the first Easter Day. It is, scholars think, the earliest of the four Gospel accounts of the Resurrection of Jesus Christ. We might think that it lacks Easter joy. “Fled … trembled … amazed … afraid”: these are not words that immediately come to mind when wishing someone a “Happy Easter”. Indeed, the fact that these women were initially silent in the face of the empty Tomb — and, for good measure, an angelic vision declaring “he is risen; he is not here” — overturns any assumption that the Resurrection of Jesus was received as a straightforward “all is good, no need to worry” affirmation.

As we realise when reading Saint Mark’s account of the Resurrection of Jesus alongside those in the other gospels, there is nothing straightforward, easily comprehended about the Resurrection. The accounts by the four Evangelists do not at all neatly, comfortably sit beside each other. The timelines, the characters, the events cannot be straightforwardly pieced together, as if we were watching the concluding episode of a television series, or reading the final chapter of an airport novel.

The various timelines, characters, and events in the accounts given of the Resurrection in the four Gospels are infinitely richer and more demanding. They are witnessing to and seeking to convey to us something of the explosion of divine presence, light, and life that occurred at that Tomb on the first Easter Day. Little wonder that the four Gospel accounts are anything but straightforward; little wonder that they can appear confused, even contradictory. Language, experience, recollection — all these are stretched far beyond what they can possibly contain on the first Easter Day. The One who is eternal Light and Life, the mighty Creator of all that is, touches and fills the Tomb with creative, life-giving power.

Neat, comfortable, easily comprehended accounts of the empty Tomb would utterly fail to convey the explosive outpouring of this creative, life-giving power. No straightforward affirmation, the Resurrection of Jesus brings us, with those women at the Tomb, to be silenced in awe and reverence before the revelation of God’s life-giving presence and saving purposes …

The current — and long-running — trend to see Christianity as a social justice project undermines the Resurrection:

There is little that quite so undermines the proclamation of the Resurrection of Jesus, the Easter faith, than regarding it as an affirmation of a political or cultural project. Neatly fitting the Resurrection into political or cultural visions, as a convenient, helpful prop, is to profoundly misunderstand (if not deny) the faith of Easter. It is to entirely set aside Saint Mark’s account of the reaction of the women at the empty Tomb, rendering their reaction unnecessary and inappropriate rather than the authentic witness to God’s presence and act in the Resurrection.

Let us reflect on this, not just on Easter, which seems an eternity ago for some, but during the rest of Eastertide:

… let us heed the response of the women at the empty Tomb, recognising in that response the witness to the out-pouring of Eternal Light and Life, bringing to humanity — broken, confused, and foolish as we are — participation in the Resurrection life, anticipated now and having its fullness in the life of the world to come …

May Easter Day renew us — amidst whatever tombs, whatever defeats and failures and fears we know — in this enduring hope, this vision glorious.

Another theme that runs from the Crucifixion through to the Resurrection is forgiveness, which is so difficult. It certainly can be for me, particularly in serious situations where people who knew how to help have been unhelpful.

It is easier to hold on to grudges against such people than it is to forgive them.

Another article in The Critic, ‘Try Christianity’, explores our difficulty in forgiving others, something that Jesus did so readily, even though He suffered far more hurt than we do.

Let’s start with apologies, something else few of us do:

… the pen of P. G. Wodehouse still manages to express a multitude of sentiments from the pews. On this occasion I’m thinking specifically of a line from The Man Upstairs: “It is a good rule of life never to apologise. The right sort of people don’t want apologies, and the wrong sort of people want to take a mean advantage of them.” In his narration, Wodehouse has summed up how many Anglicans, perhaps even many English Christians, think about God, sin, confession and forgiveness.

While Wodehouse has a point, I would venture that his view on apologies pertains to most people, not just English Christians.

Furthermore, our reluctance to forgive varies among cultures. For some, the mantra is, ‘Don’t get mad, get even’.

Using the words of the 1662 Book of Common Prayer in the first paragraph below, the article points us to our Lord’s example, which we remember on Good Friday:

… we are confronted by this God-Man who allows himself to be vulnerable, who confidently demands contrition, and whose property is always to have mercy …

Many of us still believe and act on the conviction that contrition and forgiveness is really rather complicated and perhaps should be avoided. Or that it can only be extended when the one wronged has returned to a position of power and the enfeebled supplicant comes begging. Examples are superfluous here — you will know when your hackles are raised by injustice or snobbery or idiocy.

The quality of mercy is so alien to the wounded creature that it simply must be a miracle. Today that quality is one which we see in the most maligned of persons, the Man of Nazareth, hanging on the cross. “A man of sorrows”, Isaiah called him, “acquainted with grief — despised and rejected.” When soldiers struck and mocked him he returned “Father, forgive them.” When the thief next to him asked for clemency, he granted it.

Even when we assent to a conceptual understanding of Christian forgiveness we qualify it. As Cosimo de Medici wryly put it, “We read that we ought to forgive our enemies; but we do not read that we ought to forgive our friends.” However, Jesus’ business on earth was not finished until he had assured his friend Peter, the one who denied him, of his consistency.

Today we remember that Jesus of Nazareth decided that forgiveness was worth dying for. And his life and death stand as an example and challenge to us still.

Well, one would not have seen either of these two themes in the media between Good Friday, March 29, and Easter Day, March 31, 2024.

A third article in The Critic examined the BBC’s online headlines on March 29:

… it is Good Friday, and the front page of the BBC website appears to have precisely no references to the occasion. The “culture” section contains articles about Beyoncé, the Oscars (that holy ceremony!), Godzilla x Kong and “What we know about the accusations against Diddy”. Stirring stuff.

Buried deep on the site’s “Topics” section is a “Religion” page. Recent articles include “Rastafarian faith mentor dies, aged 73” (RIP to him) and “UK’s first Turkish mosque faces threat to its future”. Nothing about Easter — though there is a guide to celebrating Holi, which is nice.

A fourth article in The Critic, ‘The Church of England is practising a secular religion’, points the finger of blame at the established Church for promoting social justice ideology:

Church attendance is of course declining. One in five worshippers has disappeared since 2019 alone. Is the Church of England spending more and more money on dubious forms of “anti-racism” under the delusion that it will attract young leftists to its services on Sundays? Or perhaps this quasi-theological endeavour is just a more winnable cause than encouraging religious belief and practice. Justin Welby cannot fill his churches but he can fill his heart with a sense of righteousness.

This isn’t good enough — not for anyone. An obsessive interest in the sacred values of equality diversity and inclusion can distract believers from the divine, but it also threatens the social functions of the Church of England. The Church is one of the last major foundations of tradition left in the United Kingdom, along with the monarchy. The identitarian left has been tearing at the stitches holding us together for a number of years. To imitate its most fanatical tendencies is to encourage divisiveness rather than inclusion.

The Church of England should stop enabling these phenomena. Granted, to place the blame for its diminished status entirely on “woke Welby” would be naive. The problem predates the current Archbishop of Canterbury. A Telegraph analysis shows that church attendance has more than halved since 1987. However, the embrace of secular religion is exacerbating rather than ameliorating its decline.

This year, the Easter services at Canterbury Cathedral featured the Lord’s Prayer recited in Urdu and in Swahili, led by native speakers of those languages. On the face of it, this is inclusive. Yet people in every non-English-speaking country recite the Lord’s Prayer in their own tongues. When, on holiday, I used to attend services at the Reformed Church of France, I joined everyone in reciting it in French. So what’s the big deal?

The Telegraph covered the story (as did GB News) in ‘Canterbury Cathedral reads Lord’s Prayer in Urdu and Swahili during Easter service’:

At the 10am service shown on the BBC, The Very Rev Dr David Monteith, Dean of Canterbury Cathedral, invited each member of the congregation to say the Lord’s Prayer in their own language, while it was led in Urdu on the microphone by a member from Pakistan. The subtitles on the screen were in English.

At an earlier service, aired on Radio 4, the prayer was led in Swahili.

The Dean said: “We invite congregations to say the Lord’s Prayer in their own first language at most of our communion services …

“From time to time, we invite someone to lead in their preferred language of prayer – today it’s in Congo Swahili as he was ordained in Zaire, and by a member of the Community of St Anselm from Pakistan …”

Then came Justin Welby’s sermon, which had nothing to do with the Resurrection, the core tenet of the Christian faith:

Shortly after the Lord’s Prayer was said, the Most Rev Justin Welby, the Archbishop of Canterbury, used his Easter sermon at the cathedral to condemn “the evil of people smugglers” in the wake of a row over the Clapham chemical attacker being granted asylum.

The article also points out:

Several Church of England dioceses faced backlash after appointing individuals or teams to address racial inequality in their regions amid concerns they would alienate ordinary worshippers.

However, dissent is also present elsewhere in the world. Anglican church groupings outside the UK are at odds with Welby:

The Archbishop has been struggling to unite the Anglican Communion because of the row on same-sex blessings.

The conservative Global South Fellowship of Anglican Churches (GSFA), which represents churches on every continent and the majority of Anglicans worldwide, has previously said that it expects the organisation to “formally disassociate” from both the Archbishop of Canterbury and the Church of England.

However, it was not only Justin Welby pulling the identity politics strings. In the United States, Joe Biden’s administration proclaimed Easter Sunday, of all days, Transgender Day of Visibility.

And here I thought that Joe Biden was a Catholic.

The Telegraph had an article on the story, ‘Joe Biden has betrayed Christian America’. The most telling sentence was this one:

And certainly he had dozens of other dates on the transgender awareness calendar, including a whole week in November, he could have chosen instead.

Returning to the UK, on April 3, The Telegraph’s Madeline Grant wrote about Richard Dawkins having his cake and eating it in ‘Christianity’s decline has unleashed terrible new gods’:

… Professor Dawkins’ admission that he considers himself a “cultural Christian”, who is, at the very least, ambivalent about Anglicanism’s decline is an undeniably contradictory position for a man who in the past campaigned relentlessly against any role for Christianity in public life, railing against faith schools and charitable status for churches.

Before we start preparing the baptismal font, it’s worth noting that Dawkins says he remains “happy” with the UK’s declining Christian faith, and that those beliefs are “nonsense”. But he also says that he enjoys living in a Christian society. This betrays a certain level of cultural free-riding. The survival of society’s Christian undercurrent depends on others buying into the “nonsense” even if he doesn’t.

Grant cites Scotland’s new Hate Crime and Public Order Act, which came into force on April 1, as an example of the ‘terrible new gods’:

By the New Atheist logic, it ought to be the most rational place in the UK since de-Christianisation has occurred there at a faster rate. Membership of the national Church of Scotland has fallen by 35 per cent in 10 years and the Scottish Churches Trust warns that 700 Christian places of worship will probably close in the next few years. A Scottish friend recently explained that every place where he’d come to faith – where he was christened, where his father was buried – had been shut or sold. This is not only a national tragedy, but a personal one.

New Atheism assumed that, as people abandoned Christianity they would embrace a sort of enlightened, secular position. The death of Christian Scotland shows this was wrong. Faith there has been replaced by derangement and the birthplace of the Scottish enlightenment – which rose out of Christian principles – now worships intolerant new gods.

The SNP’s draconian hate crime legislation is a totemic example. Merely stating facts of biology might earn you a visit from the Scottish police. But perhaps Christianity has shaped even this. It cannot be a coincidence that Scotland, home of John Knox, is now at the forefront of the denigration of women. The SNP’s new blasphemy laws are just the latest blast of that trumpet …

… Much of what atheists ascribed to vague concepts of “reason” emerged out of the faith which informed the West’s intellectual, moral, and, yes, scientific life – a cultural oxygen we breathe but never see …

… The world isn’t morally neutral, and never has been.

Recognising Christianity’s cultural impact is the first step. The bigger task facing the West is living out these values in an age when they are increasingly under threat.

On Easter Day, The Telegraph’s Tim Stanley, an agnostic turned Roman Catholic, wrote about the horrors that assisted dying (euthanasia) legislation could bring to the UK. At the end, he had this to say about the impact that widespread unbelief has had on Holy Week and Easter:

Christ died on Good Friday, but for much of the zeitgeist he has never risen again, setting the context for this debate that is minus the hope that once brightened the lives of Westerners even in war or plague.

I thank God I am a Christian. I would have to fake it if I weren’t. In an atheistic culture, beyond the here and now, there is little to live for – and when the here and now become unbearable, nowhere to turn but death.

It is up to us as individuals, with or without the help of the Church and the media, to keep the spirit of forgiveness and the hope of bodily resurrection alive. How do we do that? By studying the Bible, verse by verse.

It has become tiring to hear and read, seemingly constantly, that the Conservatives have plunged the UK into a cost-of-living crisis and that we are the only people on earth experiencing such a dire situation.

Anyone who knows a second European language will know that EU countries are experiencing similar hard times.

Interestingly enough, so are the middle-class Americans across the pond whom The Guardian interviewed for a feature published on April 9, 2024, ‘“I run out of money each month”: the Americans borrowing to cover daily expenses’. Five people’s stories are included. Three of them follow.

Keep in mind this is happening under a sainted Democrat administration, that of Joe Biden.

Most of the people interviewed rely on credit cards to get them through the month. The credit card debt is piling up in many cases.

Inflation, particularly where groceries are concerned, is a huge problem. However, health care expenses are an even bigger worry.

We discover that:

US consumer borrowing rose by $14.1bn in February, driven by the largest increase in credit card balances in three months. Analysts believe that the soaring cost of consumer debt for US households could affect president Biden’s chances for re-election, as for the first time on record, interest payments on credit cards and car loans are as big a financial burden for Americans as their mortgage interest.

Respondents to an online callout about personal levels of consumer borrowing in the US included adults of all ages and from areas across the country, with most saying they were now struggling to repay their debts.

Robin, 70, a retired arts teacher in California, depends on an annual Social Security income of $14,000. She said that she is used to being frugal but recent price hikes are defeating her best efforts at economising:

I became disabled and retired about 20 years ago …

Now, because prices have gone up dramatically – for bread, gas, everything – I run out of money each month, so I end up paying for necessities with my credit card. This has been going on for at least six months. I’m so worried. How will I ever pay this back when everything costs more and more?

I can usually manage the regular things. I rent a room for $450. My monthly credit card payment is $100, I like to pay it down as fast as possible.

It’s the unexpected expenses I struggle with, and they happen all the time, don’t they: new car tyres, taking my dog to the vet. I owe $2,500 dollars and I chip away at it, but what if my rent goes up? What if my 1997 vehicle needs to be replaced?

If the Federal Reserve would lower the interest rates, I’d at least be able to pay less interest on my debts. It’s so stressful, and lots of people are in this situation.

However, the Federal Reserve has no plans to change its policy at the moment:

The Federal Reserve announced on Wednesday that it would leave US interest rates at 5.25% to 5.5% – a 25-year high – where they have been since July.

Donna from Oregon, 63, is an accountant who suffered a foot injury. She makes $50,000 a year. Her private insurance excess is $10,000 [Obamacare, another Democrat initiative], and she is trying to pay off the cost of surgery on her foot. Even an accountant taking advantage of 0% credit cards can be defeated by the soaring cost of goods and services:

The last couple of years, when inflation hit really hard, that’s when my credit card borrowing started, as I haven’t had a raise in three years.

I’m now playing the credit card swap game: large expenses such as car repairs go on a 0% card. I then carefully monitor the expiration date of that deal on a spreadsheet and eventually transfer the balance to a new 0% interest card …

Wages aren’t catching up with costs. Handymen charge $125 per hour now in my area. You can’t make $24 an hour and pay someone $125 an hour to do your maintenance. In 2008 I made $65,000 before I lost that job. How am I supposed to get by on 15% less than I was making 15 years ago?

She has had to dip into her retirement fund to make ends meet:

The 0% credit cards have been a godsend, but this year I’ve pulled $7,000 out of my retirement fund to pay off some of my debts, and over the past couple of years I’ve taken out 10% of my retirement pot to cover bills. This will be impossible to replenish.

The surgery cost $9,200, 25% of my net annual income of $36,112 after taxes and social security – no wonder I’ve had to live off credit cards and retirement savings!

It’s frightening to pull from my retirement fund just to get by, wondering how poor I’ll be when I finally do retire, if I’ll be able to maintain my home. If you’ve worked your whole life, it’s just not right.

Chris, 52, is a special education teacher who lives in Denver and works two jobs. He worries about the long-term effect on his health, which has already cost him dearly in medical bills. He:

racked up $47,000 in loans because of unaffordable healthcare bills and essential home repairs over the past three years.

In 2020 he was finally debt-free after paying off $54,000 since his divorce, he says, but then a $12,000 medical bill came in, two HVAC [air conditioning] units in his house needed replacing and some trees removing.

Chris borrowed $33,000, then took out another $17,000 loan to help repay the first.

He says:

… although I increased my salary from $65,000 to $125,000 last year by teaching summer school and additional classes. Denver is pretty expensive, and I pay child support, so I ended up working a second job, driving for a ride-share company, to fight my way out of this debt.

I drive all night over the weekend to make $1,000 extra a month, so sometimes I worry about my health. I hope I can keep this up for a few more months, I still owe $14,000. Most Americans I know have a second job.

A fourth interviewee, whose story features at the end of the article, concluded by saying:

The American economy is so cut-throat that people are too scared to take annual leave. The rat race is toxic, and the American dream? It’s dead for the majority.

So, there you have it, my fellow Britons. Be grateful for the flawed NHS and the ability to take annual leave. The grass isn’t always greener on the other side.

One hundred years ago this week, on January 22, 1924, Ramsay MacDonald became Britain’s first Labour Prime Minister.

He was one of six so far. The others were Clement Attlee, Harold Wilson, James Callaghan, Tony Blair — and the Blair-appointed, unelected Gordon Brown.

Another centenary occurred this week: Lenin’s death and Stalin’s rise to power, but that’s another story.

1920s Labour

Ramsay MacDonald’s Labour Party was a mix of workers and intellectuals, with each group having its own political philosophy.

Historian David Torrance’s article for UnHerd, ‘Labour was never a revolutionary movement’, tells us that MacDonald had to manage the expectations of both while solving the nation’s problems (emphases mine):

… MacDonald himself could see the bigger picture. And his account of a historic day betrayed both his shock at what had just happened, as well as his apprehension at what was to come: “Without fuss, the firing of guns, the flying of new flags, the Labour Gov[ernmen]t has come in… Now for burdens & worries. Our greatest difficulties will be to get to work. Our purposes need preparation, & during preparation we shall appear to be doing nothing – and to our own people to be breaking our pledges.”

This was prescient. Over the next nine months Britain’s first Labour Prime Minister would find himself battling on a number of fronts: for acceptance by voters, co-operation with not one but two opposition parties in Parliament (Liberals and Conservatives) and most of all for support from his own colleagues, many of whom were deeply uneasy that a party with only 191 MPs had taken office at all.

The Labour Party as represented in Parliament was complex. Its structure betrayed its chaotic inception, more a fusion of local bodies and ideological factions than a combined party under a unitary authority. The civil servant Percy Grigg described a “Trade Union element”, which was more interested in moderate “bread and butter” politics than the abstract economic and social doctrine favoured by the party’s faction of “intellectuals”. And this second grouping included both former Liberals and those who had emerged from the Independent Labour Party (ILP), a separate but affiliated group. Grigg called the ILP-ers “Montagnards” after the most radical political group during the French Revolution, and remarked they followed “the lead of the Clyde in Scotland and Mr. [George] Lansbury in England”. Inclined to be unhappy at the moderation displayed by the trade unionists and intellectuals, the Montagnards would only be satisfied with the building of the New Jerusalem.

… After two months in government, Ramsay MacDonald — consumed by international as well as domestic affairs — grew concerned at the failure of his backbenchers to respond adequately to the “new conditions”. Some of what he called the “disappointed ones” had become “as hostile as though they were not of us”.

… MacDonald worked hard to make … housing legislation a reality, formally recognised the Soviet Union and brokered a new settlement between France and Germany, something he hoped would boost trade as well as soothing post-war wounds. But with only 191 MPs, and a ministry dependent on Liberal votes, he couldn’t do everything …

The rest of the MacDonald story is well known. His first administration lost a confidence vote in October 1924 …

An election was held, and the Conservatives under Stanley Baldwin were returned to power.

Five years later, in June 1929, MacDonald became Prime Minister again:

of another minority government, although this time Labour was the single largest party with 287 seats. In the midst of rising unemployment and a sterling crisis, the government agreed to resign in August 1931, but then the King persuaded MacDonald to head a “National” government. Labour’s National Executive Committee voted to expel all those who remained in office, and in the election that followed, the National government was returned with a majority of nearly 500 (mostly Tories) and the “official” Labour Party was reduced to just 52 MPs.

As his was a National Government, the Cabinet had to comprise MPs from other political parties, not just Labour. Wikipedia says:

The National Government’s huge majority left MacDonald with the largest mandate ever won by a British Prime Minister at a democratic election, but MacDonald had only a small following of National Labour men in Parliament. He was ageing rapidly, and was increasingly a figurehead. In control of domestic policy were Conservatives Stanley Baldwin as Lord President and Neville Chamberlain the chancellor of the exchequer, together with National Liberal Walter Runciman at the Board of Trade. …

MacDonald was deeply affected by the anger and bitterness caused by the fall of the Labour government. He continued to regard himself as a true Labour man, but the rupturing of virtually all his old friendships left him an isolated figure.

Welsh Labour

Most Britons connect Wales with Labour from its inception to the present day. They are not wrong, but the reality is more nuanced.

Professor Brad Evans from the University of Bath described the rise and decline of Welsh Labour in another UnHerd article, ‘How Labour lost the Welsh Valleys’:

Last year, I found myself back in the Rhondda valley and the village where I spent most of my childhood. As I walked through its typically inclement grey terraced streets, I came upon the boarded-up premises of the Ton and Pentre Labour and Progressive Club. Dereliction and a sense of decaying nobility are common features of the streets here, with clubs, institutes, chapels and all other sites of congregation looking the same. This vacant building that held many notable trade union meetings was one of the longest-running working men’s establishments in the Rhondda. Now it stands as a monument to failure. What can be said about such a relic, whose name alone testifies to everything this valley once stood for?

Because these former mining valleys in the wilds of Glamorgan were the cradle of working-class politics in Britain. Revolutionary socialism is almost as old as the mining communities in these hills: the red flag of socialism was flown for the very first time in anger over the skies of Merthyr Tydfil in 1831. And, in time, this people and landscape gave rise to the British labour movement and the party that bears its name, a party that knew who it represented and what it wanted to change.

Keir Hardie, Labour’s founding father, was elected MP for the Merthyr and Aberdare constituency only a few years before he would oversee the transition from the Independent Labour Party to the more familiar abbreviated title in 1906. A former miner who first entered the darkness of the pits at just 10-years old, he knew first-hand the toils and struggles faced by these hardened communities, and never forgot them in Parliament, wearing a deerstalker and tweed jacket in place of the expected top hat and tails. But Hardie himself never lived to see the party he conceived in power. An idealist and a pacifist, he died a broken man in 1915, as his contentious objections to the First World War (on the basis of the working-class dead and war profiteering) went unheeded.

Of Ramsay MacDonald, Evans writes:

Just nine years later though, and 100 years ago this week, the Labour Party did form its first government. It was led, though, by a Scot, Ramsay Macdonald, later expelled as a traitor and a turncoat for his collaboration with the Conservatives.

Even so, South Wales remained loyal to Labour:

as the Labour Party split and reformed, mutated and reorganised, South Wales remained its natural home. It was here that institutions later synonymous with the party, such as the National Union of Miners, were first established. And the area produced the radical autodidactic streak that gave Labour’s second prime minister, Clement At[t]lee, his greatest lieutenant: Nye Bevan gained his education in the libraries and reading rooms of Tredegar’s Miners’ institutes, arguably the most impressive educational bodies promoting the socialist cause anywhere in Europe.

Everything went well until 1966, the year of a mining disaster that is still remembered today:

… in the valleys of South Wales, a different tragedy bears a single name: Aberfan. That was the village where, on the morning of 21 October 1966, approximately 105,000 cubic metres of discarded coal waste slid into the community and engulfed the Pant Glas school and houses below. Half the town’s children were wiped out by the black avalanche that sped down the slopes, along with 28 adults.

I will add here that Queen Elizabeth II was so moved that she took an extraordinary step: she visited Aberfan in the wake of the tragedy to see what had happened and to converse with the townspeople. Monarchs had not been known for visiting the scenes of disasters outside of wartime.

Unfortunately, the people of Aberfan trusted Labour, and Labour broke that trust in the years that followed:

Aberfan had already been foretold through numerous warnings, and in previous slippages in both the village itself and nearby. But even as the slurry settled and the spoil began to be cleared, the story was only just beginning … John Collins, whose entire family was taken by the black mountain, later said: “I was tormented by the fact that the people I was seeking justice from were my people — a Labour government, a Labour council, a Labour nationalised coal board.” This Establishment cover-up was fatal. As veteran BBC broadcaster Vincent Kane later added: “Half a dozen or so organisations or individuals should have brought help to those stricken people, but instead they betrayed them.”

The central figure in this episode was Lord Robens who, as Chairman of the National Coal Board, continually presented himself as a defender of coal, while overseeing widespread pit closures. His arrogance was matched only by the lack of compassion he showed towards the families of the bereaved. Insult piled upon injury as the official tribunal into the cause of the disaster was marred by misinformation, delays and attempts to obstruct the truth. And the victims could see it — as a father of one of the bereaved children cried out when giving evidence: “Buried alive by the National Coal Board. That is what I want to see on record. That is the feeling of those present. Those are the words we want to go on the certificate”.

Worse was to follow: it was at the behest of the Labour Minister for Wales — the valleys-born George Thomas, later Lord Tonypandy — that the villagers themselves were required [to] pay to remove remaining slag heaps still towering over their homes from the disaster relief fund. But this tragedy was to become the epicentre of a far greater conjuncture, where Wales began to reckon with all the false promises of industrialisation and unionism.

In the decades that followed, the people of South Wales looked for something to replace Labour, but there was nothing:

If there is no history of South Wales without Aberfan, there is no complete history of the Labour Party which does not chapter the devastation and fury it sowed in this heartland. And, while the latter part of the Sixties was marked by the beginnings of a turn away from Labour, the following decade saw nothing arrive to replace it. Voter turnout in elections started to decline, while industrial action by the miners threw the country into darkness, a faction of the working class seemingly at war with the country — and, after the 1974 election, at war with a Labour government too.

Even Neil Kinnock’s time as Labour leader could not restore the people’s confidence in the party:

A son of the valleys, the Tredegar-born Neil Kinnock, would later assume the helm as Margaret Thatcher instigated a very different kind of revolution. An impressive intellect and pragmatist, Kinnock’s accent and bearing connected the party back to its past. He could speak with genuine feeling of the strength of those communities, “who could work eight hours underground and then come up and play football”. But that would in part be his undoing: a conservative national press constantly mocked his “boyo” persona. And while he could give voice to South Wales through his own, it ultimately became the voice of political defeat, as the pits central to valley-life were shut and sealed, something the Labour Party proved powerless to stop …

Kinnock was probably the last leader of the Labour Party as it was originally conceived. But he was ultimately given the impossible task of trying to lead a party born in another age, a product of the dire need to have political representation to counter the exploitative nature of industrial capitalism. Now, it has lost its identity, just as much as the people it represented were losing theirs.

Tony Blair seemed to end all hope:

… as Blair opened more of the country to the punishing writ of the free market, the last vestiges of industry petered out. The last of the pits closed, and some of the most prominent local employers such as Hoover in Merthyr and the Polikoff’s and Burberry factories in the Rhondda, dramatically cut their workforce, until their inevitable closure a decade or so later.

Ironically, it was through Blair’s initiative of devolution, which gave Wales, Scotland and Northern Ireland their respective assemblies — some would say parliaments — that Plaid Cymru, the Welsh nationalist party, came to prominence:

With the National Assembly for Wales established in 1997 following devolution in the Principality, the strength of support for Plaid Cymru, which had been gathering momentum since Aberfan, was now evident in the valley heartlands. By 1999 they would even manage to win seats in the once unimaginable ward of the Rhondda, where the old joke ran that even a Smurf would win should they be dressed in red. Blair’s appeal to Middle England was traded in Wales, and the party barely scrapes ahead of the Conservatives nationally now.

Evans concludes:

Today, as the party seeks to commemorate 100 years of its electable “progress”, it should turn [its] attention [to] the places which gave the party its name and yet where poverty and abandonment persist. While you can be sure that disdain for the Conservative Party is still strongly felt, the Labour Party is regarded with something equally dangerous: an apathetic rolling of the eyes by a people whose rolling hills speaks to the layered memories of resigned weathering. Even as another Keir looks set to return the Labour Party to power, Ton and Pentre Labour and Progressive Club won’t suddenly reopen. Suspended in neglect, its rooms will remain empty as another electoral season passes. And all the while, what passes for progress will continue arriving at these towns from elsewhere, just explanations that try and fail to make sense of the greatest dereliction — the dereliction of the mind and soul of a people.

Left wing versus Right wing economics

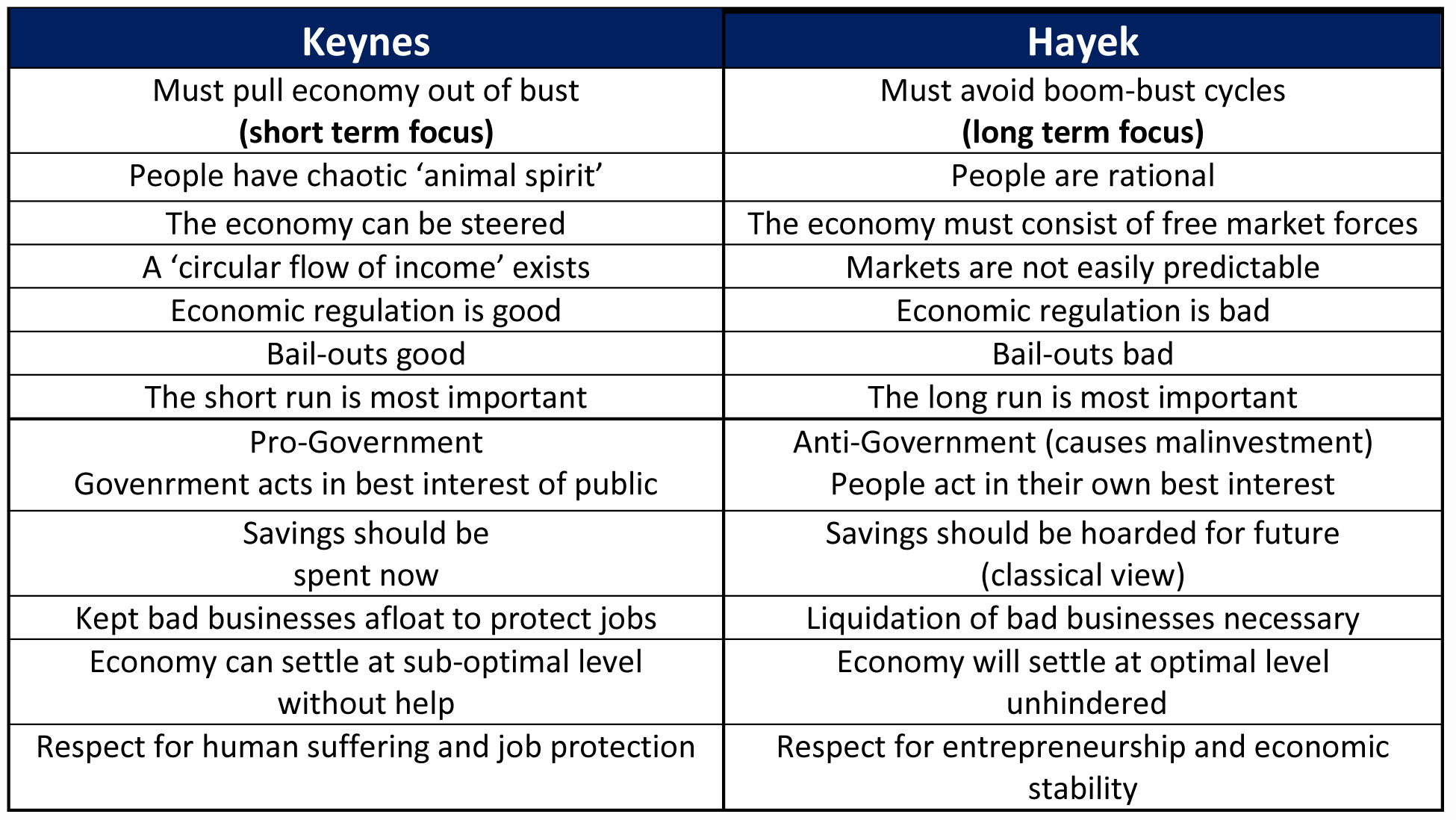

Although John Maynard Keynes was a member of the Liberal Party, Labour have adopted a Keynesian approach to economics. The chart above contrasts it with the approach of Friedrich Hayek:

Note Keynes’s advocacy of a short-term focus, the belief that people have an ‘animal spirit’ (irrationality), that bad businesses should be kept afloat and, perhaps most important of all, that government acts in the public’s best interest.

Hmm.

As a commenter on another UnHerd article, Tom McTague’s ‘Labour is stalked by treachery’, put it:

To the leftist, the good is the enemy of the perfect.

The article explains how one can arrive at that conclusion:

The conservative philosopher Maurice Cowling wrote that the essence of liberalism was the belief that “there can be a reconciliation of all difficulties and differences” in life (which he said was plainly false). In The Meaning of Conservatism, Roger Scruton argued that this was the liberal faith that lay at the heart of today’s “spirit of improvement” — the inclination of the liberal, as he put it, to “change whatever he cannot find better reason to retain”. Conservatives like Scruton and Cowling, by contrast, do not believe all difficulties and differences can be reconciled — or, in fact, should be. To govern is to weigh up competing goods and to make least worst choices based on incomplete information.

I couldn’t agree more.

Keir Starmer, who might well become the UK’s next Prime Minister, is known for his policy flip-flops. He, too, is driven by a ‘spirit of improvement’ and by ‘change’. The problem is that you can’t please everyone:

In one obvious sense, he has already been tried and found guilty of betrayal by the Left for breaking the promises he made to secure the leadership. During the Labour leadership campaign, Starmer pledged to “reverse the Tories’ cuts to corporation tax” only to then order his MPs to vote against the Tories’ own decision to reverse their own cuts themselves. He also pledged to “defend free movement as we leave the EU”, only to then make keeping out of free movement a red line in any future relationship with the EU. But the fundamental challenge for Starmer is that it is impossible for him to reconcile all of the competing promises he has made to the wider electorate and so will, inevitably, betray someone …

Today, Labour’s economic plan remains markedly empty …

The obvious danger for a future Starmer government is that without an economic strategy, there won’t be enough money to build a new Britain or fix its crumbling public realm. Instead of doing all three of the missions Starmer set himself, he will not be able to do any. And so difficult choices will follow. Should this happen, it will not be long before the ghost of Ramsay MacDonald once again starts to haunt the Labour party.

Even behind the scenes, Labour prove themselves to be contradictory.

On January 3, Guido Fawkes pointed out Labour’s hypocrisy when it comes to using consultants:

The FT has dug into the numbers this morning and found that, despite Labour squawking about government use of consultants and promising “tough new rules” to restrict it, the party has massively increased its own work with companies. Labour got £287,000 in donations of staff time from consultancies in the year to September 2023, which is up four times from only £72,000 in 12 months before …

The biggest contribution of hours came from EY UK which provided £138,000-worth. Readers might remember [shadow chancellor] Rachel Reeves using her party conference speech in October to say Labour “will slash government consultancy spending, which has almost quadrupled in just six years”.

Leftist mindset

In March 2023, Will Lloyd, who works at the Labour-supporting New Statesman, wrote a fascinating article for The Times, ‘Yes, the left are as miserable as they seem’, in which he contrasts them with conservatives:

If you can count on the left for anything, it is earnest miserablism. Political movements are more than the sum of policy papers and press conferences. Over time they acquire their own characteristic idioms, gaits and dress codes. Beards are more common on the left than the right, for instance …

Joylessness, too, comes with this territory, a stereotype that has remarkably solid groundings in social science. Left-wing political views tend to shake hands with high neuroticism, which is associated in individuals with anxiety and overthinking, and in political movements with consistently blowing elections. In the US, a 2021 paper by Catherine Gimbrone, Lisa Bates, Seth Prins and Katherine Keyes entitled “The politics of depression: Diverging trends in internalising symptoms among US adolescents by political beliefs” took the emotional temperature of 12th-grade (year 13) students between 2005 and 2018. It found that liberal girls, with liberal boys following them, experienced surging rates of depressive symptoms. Conservative teenagers, perhaps inured to inequality or the climate crisis or the rise of populism by their beliefs, were not nearly so sad.

Were these distressed teenagers left-wing because they were miserable, or miserable because they were left-wing? The paper does not quite give an answer. I wonder if both can be simultaneously true. Misery, in any case, was tied to ideology.

Considering these findings, the economist Tyler Cowen claimed that “you cannot understand the American public intellectual sphere” without a grasp of the close connection between left thinking and depressive tendencies. The same holds in Britain, and not just among public intellectuals. Over the past ten years I have attended demos, protests, drum circles, vigils, Marxist reading groups, union meetings and picket lines. At each of these gatherings there was usually talk of “joy” and “care”, though little of either in evidence.

I know few natural Tories, fewer cradle Labourites, and many born-again, creedal leftwingers. The Tories … have wafted through these crisis years without once discussing therapy with me, or reaching for antidepressants. The same cannot be said of the others.

Once you are aware of this link between private sadness and publicly proclaimed left-wing beliefs, it is difficult ever to forget. New Labour’s behind-closed-doors difficulties begin to make more sense when you understand that many of its leading players were not the happiest warriors …

Of course, the right has its own pathologies. An obdurate lack of empathy and, more recently, a bovine, unconvincing embrace of “man in the street” aspirations …

But there is a reason why the ingenious left-wing German essayist Walter Benjamin railed against “left-wing melancholia” in the Thirties. There is a reason, too, why the most brilliant British left thinker of his generation, Mark Fisher, wrote most insightfully about depression, not capitalism. A movement that longs, in its depths, for a utopia that will never be built is usually going to be unhappy. This constant state of unfulfilled desire, the painful political equivalent of unrequited love, is the birthmark the left can never truly hide.

Don’t let American squalor happen here

This brings me to my last article from UnHerd, ‘Why American cities are squalid’.

I’m not sure that the article explained why this is, although one of the comments on it certainly did: Democrat control.

There aren’t many Republican cities (this chart shows 10 of 51 cities over quarter of a million):

And those are the smaller/less diverse/higher trust ones like Jacksonville, Fl and Anchorage, Alaska.

I will let readers examine the article and the comments for themselves, because they are too depressing to detail here: violent deaths, vagrants urinating on public transport, a homeless person defecating in the middle of a food court and so on.

I worry about Glasgow, with its upcoming shooting galleries (supervised drug consumption rooms), which will make a declining city even more unsafe.

I worry even more about London, with its high rate of stabbings and the homeless people sleeping in shop doorways.

What could that lead to? Every declining American city I know of started with a high crime rate. From there it went to homelessness, open consumption of drugs in the street and uncontrolled behaviour, e.g. using public spaces as a toilet.

What all these cities had and have in common are Democrat mayors and Democrat-controlled councils.

Conclusion

Which brings me back to Labour. Look at how they treated the good people of Aberfan. They cared nothing for them.

Labour won’t care about you, either. They’ll say anything to get into power, then leave their many promises unfulfilled.

One could be forgiven for thinking that the world is going down the plughole.

However, an illuminating chart from Our World in Data shows that this is not so. The chart, which covers the years from 1820 to 2019, illustrates ‘The World as 100 People’. I found it elsewhere online and felt duty-bound to share it. Note that the world’s population increased seven-fold during that time, from 1.1 billion to 7.7 billion people.

This is worth sharing with family and friends, especially children, who are panicked at school and worried about an apocalyptic future.

Also worth noting is Newsweek’s 2024 Future Possibilities Index, which places the United Kingdom at the top of the list of countries best placed to succeed in the coming years. Denmark comes second and the United States comes third.

Admittedly, Newsweek’s survey is along the lines of ‘building back better’, but it covers six economic criteria for success: Exabyte (high tech), Wellbeing, Net Zero, Circular, Bio Growth and Experience.

It is 61 pages long but comes with an executive summary at the beginning.

It is well worth reading, as it is something to be happy about in the doldrums of winter. Enjoy!

Yesterday’s post on Genesis 3:16 was about God’s curse on Eve and all women following her transgression in the Garden of Eden: eating the fruit from the Tree of Knowledge (of Good and Evil).

God’s dual curse involved womankind’s difficulty with childbearing and with husbands (men in general), their two primary relationship groups.

Throughout history, women have suffered with both. There is no real relief in sight, although the effects may be partially mitigated through faith and godly living.

Below are examples of how the curse of Eve has played out in recent times.

Childbirth

On October 19, 2023, the House of Commons held a debate for Baby Loss Awareness Week concerning the alarming levels of infant mortality in NHS trusts.

MPs discussed the findings of Donna Ockenden’s eponymous report on this topic as well as their own personal experiences. I hadn’t intended to watch it, but I happened to be preparing dinner at the time. It was shocking.

Most moving was the testimony from Patricia Gibson, the SNP MP for North Ayrshire and Arran, excerpted below (emphases mine):

… I always want to participate in this debate every year because I think it is an important moment—a very difficult moment, but an important one—in the parliamentary calendar. It is significant that the theme this year is the implementation of the findings of the Ockenden report in Britain, because that report was very important. We all remember concerns raised in the past about neonatal services in East Kent and Morecambe Bay, and the focus today on the work undertaken by Donna Ockenden in her maternity review into the care provided by Shrewsbury and Telford Hospital NHS Trust really matters.

Donna Ockenden is currently conducting an investigation into maternity services at Nottingham University Hospitals NHS Trust. That comes in the wake of the fact that in the past, concerns have been raised about a further 21 NHS trusts in England with a mortality rate that is over 10% more than the average for that type of organisation, with higher than expected rates of stillbirth and neonatal death.

To be clear, I do not for one minute suggest that this is not a UK-wide problem, as I know to my personal cost. As the Minister will know, concerns remain that, despite a reduction in stillbirths across the UK, their number is still too high compared with many similar European countries, and there remain significant variations across the UK. Those variations are a concern. We know that they could be, and probably are, exacerbated by the socioeconomic wellbeing of communities. We know that inequality is linked to higher stillbirth rates and poorer outcomes for babies. Of course, the quality of local services is also a huge factor, and this must continue to command our attention.

When the Ockenden report was published earlier this year, it catalogued mistakes and failings compounded by cover-ups. At that time, I remember listening to parents on the news and hearing about what they had been through—the stillbirths they had borne, the destruction it had caused to their lives, the debilitating grief, the lack of answers and the dismissive attitude of those they had trusted to deliver their baby safely after the event. I do not want to again rehearse the nightmare experience I had of stillbirth, but when that report hit the media, every single word that those parents said brought it back to me. I had exactly the same experience when my son, baby Kenneth, was stillborn on 15 October 2009—ironically, Baby Loss Awareness Day.

That stillbirth happened for the same reasons that the parents described in the wake of the Ockenden report. Why are we still repeating the same mistakes again and again? I have a theory about that, which I will move on to in a moment. It was entirely down to poor care and failings and the dismissive attitude I experienced when I presented in clear distress and pain at my due date, suffering from a very extreme form of pre-eclampsia called HELLP syndrome. I remember all of it—particularly when I hear other parents speaking of very similar stories—as though it were yesterday, even though it is now 14 years later. I heard parents describing the same things that happened to me, and I am in despair that this continues to be the case. I hope it is not the case, but I fear that I will hear this again from other parents, because it is not improving. I alluded to that in my intervention on the hon. Member for East Worthing and Shoreham [Tim Loughton, Conservative], and I will come back to it.

While I am on the issue of maternal health, expectant mothers are not being told that when they develop pre-eclampsia, which is often linked to stillbirths, that means they are automatically at greater risk of heart attacks and strokes. Nobody is telling them that they are exposed to this risk. I did not find out until about five years after I came out of hospital. Where is the support? Where is the long-term monitoring of these women? This is another issue I have started raising every year in the baby loss awareness debate. We are talking about maternal care. We should be talking about long-term maternal care and monitoring the health of women who develop pre-eclampsia …

… We are seeing too many maternity failings, and now deep concerns are being raised about Nottingham University Hospitals NHS Trust. I understand that the trust faces a criminal investigation into its maternity failings, so I will not say any more about it. The problem is that when failures happen—and this, for me, is the nub of the matter—as they did in my case at the Southern General in Glasgow, now renamed the Queen Elizabeth University Hospital, lessons continue to be not just unlearned but actively shunned. I feel confident that I am speaking on behalf of so many parents who have gone through similar things when I say that there is active hostility towards questions raised about why the baby died. In my case, I was dismissed, then upon discharge attempts were made to ignore me. Then I was blamed; it was my fault, apparently, because I had missed the viewing of a video about a baby being born—so, obviously, it was my fault that my baby died.

It was then suggested that I had gone mad and what I said could not be relied upon because my memory was not clear. To be absolutely clear, I had not gone mad. I could not afford that luxury, because I was forced to recover and find out what happened to my son. I have witnessed so many other parents being put in that position. It is true that the mother is not always conscious after a stillbirth. Certainly in my case, there was a whole range of medical staff at all levels gathered around me, scratching their heads while my liver ruptured and I almost died alongside my baby. Indeed, my husband was told to say his goodbyes to me, because I was not expected to live. This level of denial, this evasion, this complete inability to admit and recognise that serious mistakes had been made that directly led to the death of my son and almost cost my own life—I know that is the case, because I had to commission two independent reports when nobody in the NHS would help me—is not unusual. That is the problem. That kind of evasion and tactics are straight out of the NHS playbook wherever it happens in the UK, and it is truly awful.

I understand that health boards and health trusts want to cover their backs when things go wrong, but if that is the primary focus—sadly, it appears to be—where is the learning? Perhaps that is why the stillbirth of so many babies could be prevented. If mistakes cannot be admitted when they are made, how can anyone learn from them? I have heard people say in this Chamber today that we do not want to play a blame game. Nobody wants to play a blame game, but everybody is entitled to accountability, and that is what is lacking. We should not need independent reviews. Health boards should be able to look at their practices and procedures, and themselves admit what went wrong. It should not require a third party. Mothers deserve better, fathers deserve better, and our babies certainly deserve better.

Every time I hear of a maternity provision scandal that has led to stillbirths—sadly, I hear it too often—my heart breaks all over again. I know exactly what those parents are facing, continue to face, and must live with for the rest of their lives—a baby stillborn, a much-longed-for child lost, whose stillbirth was entirely preventable.

… Some people talk about workforce pressure, and it has been mentioned today. However, to go back to the point made by the hon. Member for Truro and Falmouth (Cherilyn Mackrory [Conservative]), for me and, I think, many of the parents who have gone through this, the fundamental problem is the wilful refusal to admit when mistakes have happened and to identify what lessons can be learned in order to prevent something similar happening again. To seek to evade responsibility, to make parents feel that the stillbirth of their child is somehow their own fault or, even worse, that everyone should just move on and get on with their lives after the event because these things happen—that is how I was treated, and I know from the testimony I have heard from other parents that that is how parents are often treated—compounds grief that already threatens to overwhelm those affected by such a tragedy. I do not want to hear of another health board or NHS trust that has been found following an independent investigation to have failed parents and babies promising to learn lessons. Those are just words.

When expectant mums present at hospitals, they should be listened to, not made to feel that they are in the way or do not matter. How hospitals engage with parents during pregnancy and after tragedy really matters. I have been banging on about this since I secured my first debate about stillbirth in 2016, and I will not stop banging on about it. I am fearful that things will never truly change in the way that they need to, and that simply piles agony on top of tragedy. I thank Donna Ockenden for her important work, and I know she will continue to be assiduous in these matters in relation to other work that she is currently undertaking, but the health boards and health trusts need to be much more transparent and open with parents when mistakes happen. For all the recommendations of the Ockenden report—there are many, and they are all important—we will continue to see preventable stillbirths unless the culture of cover-ups is ended. When the tragedy of stillbirth strikes, parents need to know why it happened and how it can be prevented from happening again. That is all; a baby cannot be brought back to life, but parents can be given those kinds of reassurances and answers. That is really important to moving on and looking to some kind of future.

It upsets me to say this, but I have absolutely no confidence that lessons were learned in my case, and I know that many parents feel exactly the same. However, I am very pleased to participate again in this annual debate, because these things need to be said, and they need to keep being said until health boards and NHS trusts stop covering up mistakes and have honest conversations when tragedies happen, as sometimes they will. Parents who are bereaved do not want to litigate; they want answers. It is time that NHS trusts and health boards were big enough, smart enough and sensitive enough to understand that. Until mistakes stop being covered up, babies will continue to die, because failures that lead to tragedies will not be remedied or addressed. That is the true scandal of stillbirth, and it is one of the many reasons why Baby Loss Awareness Week is so very important, to shine a light on these awful, preventable deaths for which no one seems to want to be held accountable.

I will just add a postscript here about a cousin of mine who gave birth five times in the 1990s in the United States with the best of private health care.

John MacArthur and Matthew Henry both suggest that godly living will prevent bad experiences in pregnancy and childbirth. Yet my cousin is a devout Catholic, and she was just as devout during her pregnancies. She is middle class, and her husband is financially self-sufficient, better off than most men in his social cohort.

Nevertheless, my cousin had horrific third trimesters, and each of her pregnancies resulted in pre-eclampsia. Therefore, I object to men, especially ordained men, intimating that a woman’s godly living will alleviate suffering when she is carrying a child. All I can say about my cousin and other godly women who live through such life-threatening situations is that their plight might be a form of sanctification: suffering imposed from on high for greater spiritual refinement. I don’t have an answer.

Fortunately, my cousin recovered and has five healthy adult sons who bring her much happiness.

Men

What more needs to be said about the role of men in women’s lives that hasn’t already been said?

Below are a few recent news items exploring the ongoing war between the sexes.

Divorce

In the Philippines, which is still predominantly Roman Catholic, women want the law changed to allow for divorce. On December 28, 2023, The Telegraph carried the story, ‘Divorce in the Philippines: “My husband beat me over and over — I still can’t legally divorce him”’:

Ana takes out her phone and scrolls through the grim set of photos. In them, her face is purple and swollen, her lip cut – it wasn’t the first time her husband struck her, but the 48-year-old hopes it will be the last.

“He followed me with a wooden stick and hit me over and over,” says Ana, whose name has been changed. “I remember thinking, this time he’s going to kill me … I shouted for help but I don’t think anyone heard. So I ran.”

As she sat in hospital later that night in August, Ana came to a stark realisation: after 19 years, two daughters, and plenty of violence, she wanted a divorce.

There’s only one problem: in the Philippines, it’s illegal.

“I don’t want him in my life anymore,” Ana says. “Separation isn’t enough, I cannot say that is freedom. It would be like a bird in a cage – you cannot fly wherever you go, because you are married so you are linked … But in the Philippines, the law doesn’t stand with me.”

The southeast Asian country is the only place outside the Vatican which prohibits divorce, trapping thousands of people in marriages that are loveless at best, abusive and exploitative at worst.

But now, as new legislation creeps through Congress, there are mounting hopes that change may finally be on the horizon in this conservative, Catholic country …

“I’m a Catholic, I go to church, but I also believe it’s my human right to become divorced. I want to try to convince others of that too,” says Ana, between bites of a homemade custard tart.

“In the meantime, I’m not giving up on love. Where there’s life, there’s love.”

There was a time when divorce was allowed for everyone in the Philippines, but that all changed with independence:

Though banned during the Spanish colonial era, divorce on the grounds of adultery or concubinage was legalised in 1917 under American occupation, and further expanded by the Japanese when they took control during World War Two.

But in 1950, when the newly independent country’s Civil Code came into effect, these changes were repealed.

Today, only Muslims can obtain a divorce in the Philippines:

Today, most couples – bar Muslims, who are covered by Sharia laws which allow for divorce – have two options: legal separation, which doesn’t end a marriage but allows people to split their assets; or annulment, which voids the nuptials and enables individuals to remarry, as the union never existed in the eyes of the law.

Every other couple has to jump through highly challenging legal and financial hoops to obtain some sort of separation:

… the grounds are narrow, the process bureaucratic, the courts stretched and the costs extortionate.

Gaining an annulment, for instance, involves proving someone was forced into a marriage or mentally unsound on their wedding day. Brookman, a solicitors firm specialising in divorce, warns a “large amount of evidence” is required – and the costs often spiral to “roughly the average salary” in the Philippines.

“Some say it’s an anti-poor, pro-rich process because it takes quite a bit of effort, resources and money to gain an annulment,” says Carlos Conde, a senior researcher at Human Rights Watch. “People who have access to lawyers can go through the process, but for the majority of poor Filipinos that’s just not an option. And so they stay in toxic relationships.”

Even where people do have the funds, the outcome is far from guaranteed. Take Stella Sibonga. The 46-year-old filed for an annulment in 2013, keen to give marriage a second chance with her long-term boyfriend. Five years prior, she left a decade-long union she described as “traumatic and miserable”.

Yet, 300,000 pesos (roughly £4,300) and 10 years later, Ms Sibonga remains married to the “wrong man”.

“I have no idea when I’ll get a final verdict,” she says. “In the meantime, people say I’m living in sin with my boyfriend, they judge me for it… Really, it’s a nightmare.”

Catholic clergy are firmly opposed to a divorce law in the Philippines, and legislators tread carefully:

“We remain steadfast in our position that divorce will never be pro-family, pro-children, and pro-marriage,” Father Jerome Secillano, the executive secretary of the Catholic Bishops’ Conference of the Philippines, said in September. He has previously criticised “legislators who rather focus on breaking marriages and the family rather than fixing them”.

The church has huge influence in the Philippines, where nearly 80 per cent of the population is Catholic.

“The main difficulty is the opposition to the divorce bill by this powerful block led by the Catholic church and religious fundamental groups,” says Mr Conde. “Many legislators are not keen to butt heads with or offend the church … it is tough to do battle against them.”

I understand the clergy’s point, but some things just cannot be fixed.

The country’s Reproductive Health law passed in 2012, yet its full implementation was blocked for years by legal challenges from the Church, which had earlier threatened the measure’s authors with excommunication:

The fight to ensure access to contraception was a case in point. After more than a decade of gruelling debate, negotiations and lobbying, the Reproductive Health (RH) law finally passed in 2012 – only for full implementation to be blocked for years amid legal challenges from the church.

In 2022, government figures suggested 42 per cent of women still had an unmet need for family planning, meaning they wanted to use contraception but were not able to access it. Over half of pregnancies in the Philippines are “unintended”.

“The Catholic hierarchy in the country was vociferously against the RH bill, so much so that it threatened the authors of the measure with excommunication and defeat at the polls,” says Mr Lagman [Edcel Lagman, congressman and author of the divorce bill in the House of Representatives]. But he thinks the fight for divorce could be easier.

“Although representatives of the church have stated that as an institution, it is strongly against the measure, I think that this time around it is not as vehement in its opposition,” he adds. “All Catholic countries worldwide, except for the Philippines, have already legalised absolute divorce. This is a recognition that divorce does not violate Catholic dogma.”

This is the state of play with the proposed divorce bill:

“Now, for the first time, both the House and the Senate have approved their respective measures at the committee level,” Edcel Lagman, congressman and author of the divorce bill in the House of Representatives, told the Telegraph.

“I am still very optimistic that the present Congress will pass the divorce bill and President Ferdinand Marcos Jr, who has said before that he is pro-divorce, will sign the measure into law… The Philippines needs a divorce law, and we need it now – it is not some dangerous spectre that we must fight against.”

More and more people here agree. In 2005, a survey by the polling company Social Weather Stations found 43 per cent of Filipinos supported legalising divorce “for irreconcilably separated couples,” while 45 per cent disagreed. This had shifted to 53 per cent in favour and 32 per cent against in the same survey in 2017.

We shall see what happens in 2024.

Virtual reality

However, a woman does not need to come into physical contact with a man in order to feel abused. Over the Christmas period, allegations emerged that a girl had been subjected to a ‘virtual rape’ while playing a metaverse game.

The story was all over media outlets. On January 2, 2024, The Times reported, ‘Police investigate “virtual rape” of girl in metaverse game’: